A new phone

7th August 2009

For someone with a more than passing interest in technology, it may come as a surprise to you to learn that mobile telephony isn’t one of my strong points at all. That’s all the more marked when you cast your eye back over the developments in mobile telephone technology in recent years. Admittedly, until I subscribed to RSS feeds from the likes of TechRadar, the computing side of the area didn’t pass my way very much at all. That act has alerted me to the now unmissable fact that mobile phones have become small portable computers, regardless of whether they are an offering from Apple or not. After the last few years, no one can say that things haven’t got really interesting.

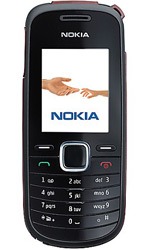

In contrast to all the excitement, I only got my first phone in 2000 and have stuck with it since, despite its scuffs and scratches along with its battery life troubles. Part of the reason for this is a certain blindness induced by having the thing on a monthly contract. As if that were not sufficient to hide away the option of buying a phone on its own, there’s the whole pay as you go arena too. The level of choice is such that packages such as those mentioned gain more prominence and potentially stop things in their tracks but I surmounted the perceived obstacles to buy a Nokia 1661 online from the Carphone Warehouse and collect it from the nearest store. The new replacement for my old Motorola is nothing flashy. Other phones may have nice stuff like an on-board camera or web access but I went down the route of sticking with basic functionality, albeit in a modern package with a colour screen. Still, for around £35, I got something that adds niceties like an alarm clock and a radio to the more bread and butter operations like making and taking phone calls and text messaging. Pay as you go may have got me the phone for less but I didn’t need a new phone number since I planned to slot in my old SIM card anyway; incidentally, the latter operation was a doddle once I got my brain into gear.

Now that I have replaced my mobile handset like I would for my land-line phone, I am left wondering why I dallied over the task for as long as I have. It may be that the combination of massive choice and a myriad of packages that didn’t appeal to me stalled things. With an increased awareness of the technology and options like buying a SIM card on its own, I can buy with a little more confidence now. Those fancier phones may tempt but I’ll be treating them as nice to have rather than essential purchases. Having said all of this, the old handset isn’t going into the bin just yet. It may be worn and worthless but its tri-band capabilities (I cannot vouch for the Nokia on this front) may make it a useful back up for international travel. The upgrade has given me added confidence for trying again when needs must but there is no rush and the probability of my developing an enthusiasm for fancy handsets is no higher.

Buying in Britain

15th June 2010

Under this heading, I have collected my listings of places on the web where you can purchase computing, mobile telephony and photographic equipment. As per the title, these apply to the U.K. because I have yet to import anything from beyond the shores of the island where I am writing these words. While I cannot claim to have patronised all of the emporia that are listed, the hope is that there’s enough there to enable some price checking and sourcing of goods that otherwise may not have wide availability. You never know but they even might come in useful.

As you might have guessed from the names, we are not just limiting ourselves to computers, phones and cameras but include the accessories too. In fact, since the explosion of mobile telephony and digital photography, a major crossover has developed between formerly very separate business areas. The fact that many mobile phones carry the same processing power as desktop PCs did little more than a decade ago rather forces the issue.

When film held sway, photography was a very analogue affair with most of the magic being made in the camera and in printing labs, including personal darkrooms. A scanner was an essential item for digitising any images. Film still lives on but the convenience of digital capture has won me over, particularly with its thrusting the processing back on my shoulders instead of paying someone else to do it. That costs time instead of money but the latter quantity is less plentiful these days. Just as my interest in desktop computing continues to persist into an age when everything is getting more and more mobile, the art of photography hasn’t been automated out of existence because it always has been the photographer that was the most important actor in the discipline.

Then, there’s the upsurge in mobile computing. While I may have been late to the game with my purchase of a BlackBerry and an Asus Eee PC in the last few months, it’s clear enough where things are going with the gadgets becoming more and more appealing. Now, it’s hard to believe that it was just under a decade ago when most mobile phones were for making and taking voice calls along with sending text messages. Yes, PDAs were about but they didn’t seem to be as commonplace as smartphones are today. Still, it seems that mobile phone technology is going to continue evolving so it’ll be interesting to see where things go, especially with the advent of cloud computing.

With all of the changes that are in train, desktop computing is likely to continue in one form or another. We may be in the heart of a technological revolution brought on by mobile and cloud computing but there’s still something more productive about sitting at a desktop and working a machine with a mouse and keyboard. My hunch is that desktop computing is going to itself alter over the next few years with touchscreen monitors already becoming available. That development could get interesting if combined on a full specification laptop that rarely spends any time away from being plugged into a power socket.

All in all, it feels as if we are moving through a period of massive change in the world around us after a period of what felt like prolonged stability. Even in an economic downturn, it feels as if all hell has broken loose in the world of technology with old certainties being overthrown and it looks as if it is going to stay that way for a while. There may be a time of consolidation yet but the trick at the moment is to control any temptations until we find a use for the shiny new things that attract massive attention. Then, the listings that I have collected here might have a use.

Limiting Google Drive upload & synchronisation speeds using Trickle

9th October 2021

Having had a mishap that lost me some photos in the early days of my dalliance with digital photography, I have been far more careful since then and that now applies to other files as well. Doing regular backups is a must that you find reiterated by many different authors and the current computing climate makes doing that more vital than it ever was.

So, as well as having various local backups, I also have remote ones in the form of OneDrive, Dropbox and Google Drive. These more correctly are file synchronisation services but disciplined use can make them useful as additional storage facilities in the interests of maintaining added resilience. There also are dedicated backup services that I have seen reviewed in the likes of PC Pro magazine but I have yet to make use of those.

Insync

Part of my process for dealing with new digital photo files is to back them up to Google Drive and I did that with a Windows client in the early days but then moved to Insync running on Linux Mint. One drawback to the approach is that this hogs the upload bandwidth of an internet connection that has yet to move to fibre from copper cabling. Having fibre connections to a local cabinet helps but a 100 KiB/s upload speed is easily overwhelmed and digital photo file sizes keep increasing. It does not help that I insist on using more flexible raw formats like DNG, CR2 or CR3 either.

Making fewer images could help to cut the load but I still come away from an excursion with many files because I get so besotted with my surroundings. This means that upload sessions take numerous hours and can extend across calendar days. Ultimately, this makes my internet connection far less usable so I want to throttle upload speed much like what is possible in the Transmission BitTorrent client or in the Dropbox client. Unfortunately, this is not available in Insync so I have tried using the trickle command instead and an example is below:

trickle -d 2000 -u 50 insync

Here, the upload speed is limited to 50 KiB/s while the download speed is limited to 2000 KiB/s. In my case, the latter of these hardly matters while the former leaves me with acceptable internet usability. Insync does not work smoothly with this, however, so occasional restarts are needed to keep file uploads progressing and CPU load also is higher. As rough as the user experience feels, uploads can continue in parallel with other work.

gdrive

One other option that I am exploring is the use of the command-line tool gdrive and this appears to work well with trickle. After downloading and installing the tool, getting going is a matter of issuing the following command and following the instructions:

gdrive about

On web servers, I even have the tool backing up things to Google Drive on a scheduled basis. Because of a Google Drive limitation that I have encountered not only with gdrive but also with Insync and Google’s own Windows Google Drive client, synchronisation only can happen with two new folders, one local and the other remote. Handily, gdrive supports the usual bash style commands for working with remote directories so something like the following will create a directory on Google Drive:

gdrive mkdir -p [ID for parent folder] ttdc

Here, the ID for the parent folder may be omitted but it can be obtained by going to Google Drive online and getting a link location by right-clicking on a folder and choosing the appropriate context menu item. This gets you something like the following and the required identifier is found between the last slash and the first question mark in the address string (so as not to share any real links, I made the address more general below):

https://drive.google.com/drive/folders/[remote folder ID]?usp=sharing
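
Picking the identifier out of such a link can be scripted too. What follows is only a minimal sketch in bash, assuming that the link has the shape shown above; the function name folder_id_from_url is made up for illustration and is not part of gdrive.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull the folder ID out of a Google Drive sharing link.
# Assumes the ID sits between the last slash and the first question mark.
folder_id_from_url() {
    local url="$1"
    local without_query="${url%%\?*}"     # drop everything from the first "?"
    printf '%s\n' "${without_query##*/}"  # keep what follows the last "/"
}

# Example with a placeholder ID, not a real link
folder_id_from_url "https://drive.google.com/drive/folders/EXAMPLE_ID?usp=sharing"
```

The output could be stored in a shell variable for use wherever a remote folder ID is needed.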

Then, synchronisation uses a command like the following:

gdrive sync upload [local folder or file path] [remote folder ID]

There also is the option to do a one-way upload and this is the form of the command used:

gdrive upload [local folder or file path] -p [remote folder ID]

Because every file or folder object has its own ID on Google Drive, it is possible to create two objects on there that appear to have the same name though that is sure to cause confusion even if you know what is happening. Each of the above commands can be throttled using trickle as well:

trickle -d 2000 -u 50 gdrive sync upload [local folder or file path] [remote folder ID]

trickle -d 2000 -u 50 gdrive upload [local folder or file path] -p [remote folder ID]

Handily, this works without the added drama seen with Insync and lends itself to scripting as well so it could be something that I will incorporate into my current workflow. One thing that needs to be watched is file upload failures but there may be ways to catch those and retry them so that would be another thing that needs doing. This is built into Insync and it would be a learning opportunity if I were to stick with gdrive instead.
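
One possible way to catch failures and retry them is a small wrapper function. This is a sketch under assumptions and not something that I have proven in daily use: the retry function is a made-up helper, and it relies on the wrapped command returning a non-zero exit status when an upload fails, which I have not verified for every gdrive failure mode.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command until it succeeds or attempts run out.
# Usage: retry MAX_ATTEMPTS DELAY_SECONDS command [args...]
retry() {
    local max_attempts="$1" delay="$2" attempt=1
    shift 2
    until "$@"; do
        if [ "$attempt" -ge "$max_attempts" ]; then
            echo "Giving up after $attempt attempts: $*" >&2
            return 1
        fi
        echo "Attempt $attempt failed, retrying in ${delay}s" >&2
        attempt=$((attempt + 1))
        sleep "$delay"
    done
}

# Intended use (commented out because it needs trickle, gdrive and real values):
# retry 5 60 trickle -d 2000 -u 50 gdrive upload "$HOME/photos" -p "REMOTE_FOLDER_ID"
```

The delay between attempts gives a flaky connection time to recover, while the cap on attempts stops a script from looping forever when something is properly broken.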

The wonders of mod_rewrite

24th June 2007

When I wrote about tidying dynamic URLs a little while back, I had no inkling that there would be a second part to the tale. The cause is my discovery of mod_rewrite, an Apache module that facilitates URL translation. The effect is that one URL is presented to the user in the browser address bar, and the exact same URL is also seen by search engines, while another is passed to the server for processing. It might sound like subterfuge but it works very well once you manage to get it set up properly. The web host for my hillwalking blog/photo gallery has everything configured such that it is ready to go but the same did not apply to the offline Apache 2.2.x server that I have going on my own Windows XP box. There were two parts to getting it working there:

- Activating mod_rewrite on the server: this is as easy as uncommenting a line in the httpd.conf file for the site (the line in question is: LoadModule rewrite_module modules/mod_rewrite.so).

- Ensuring that the .htaccess file in the root of the web server directory is active. You need to set the values of the AllowOverride directives for the server root and CGI directories to All so that .htaccess is active. Not doing it for the latter will result in an error beginning with the following: Options FollowSymLinks or SymLinksIfOwnerMatch is off which implies that RewriteRule directive is forbidden. Having Allow from All set for the required directories is another option to consider when you see errors like that.

Once you have got the above sorted, add this line to .htaccess: RewriteEngine On. Preceding it with an Options directive to ensure that FollowSymLinks and SymLinksIfOwnerMatch are switched on does no harm at all and may even be needed to get things running. That done, you can set about putting mod_rewrite to work with lines like this:

RewriteRule ^pages/(.*)/?$ pages.php?query=$1

The effect of this is to take http://www.website.com/pages/input and convert it into a form for action by the server; in this case, that is http://www.website.com/pages.php?query=input. Anything contained by a bracket is assigned to the value of a system-named variable. If you have several bracketed sections, they are assigned to sequentially numbered variables as follows: $1 for the first, $2 for the second and so on. It’s all good stuff when you get it going and not only does it make things look much neater but it also possesses an advantage when it comes to future-proofing too. Web addresses can be kept constant over time, even if things change behind the scenes. It means that returning visitors will find what they saw the last time that they visited and surely must ensure good karma in eyes of those all important search engines.
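
To see what the bracketed section captures without touching a web server, the rule can be roughly emulated on the command line. This is only an illustrative sketch: sed stands in for mod_rewrite here, the function name rewrite_path is made up, and the path is given without its leading slash on the assumption that the rule lives in a .htaccess file in the web root.

```shell
#!/usr/bin/env bash
# Rough emulation of: RewriteRule ^pages/(.*)/?$ pages.php?query=$1
# sed writes the first captured group as \1 where mod_rewrite uses $1.
rewrite_path() {
    printf '%s\n' "$1" | sed -E 's#^pages/(.*)/?$#pages.php?query=\1#'
}

rewrite_path "pages/input"      # prints pages.php?query=input
rewrite_path "about/contact"    # no match, so it is printed unchanged
```

One thing to keep in mind with mod_rewrite itself is that (.*) is greedy, so a trailing slash ends up inside the captured value; a stricter group such as ([^/]+) avoids that when only a single path segment is expected.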

Adding a new domain or subdomain to an SSL certificate using Certbot

11th June 2019

On checking the Site Health page of a WordPress blog, I saw errors that pointed to a problem with its SSL set up. The www subdomain was not included in the site’s certificate and was causing PHP errors as a result though they had no major effect on what visitors saw. Still, it was best to get rid of them so I needed to update the certificate as needed. Execution of a command like the following did the job:

sudo certbot --expand -d example.com,www.example.com

Using a Let’s Encrypt certificate meant that I could use the certbot command since that already was installed on the server. The --expand and -d switches ensured that the listed domains were added to the certificate to sort out the observed problem. In the above, a dummy domain name is used but this was replaced by the real one to produce the desired effect and make things as they should have been.

Using .htaccess to control hotlinking

10th October 2020

There are times when blogs cease to exist and the only place to find the content is on the Wayback Machine. Even then, it is in danger of being lost completely. One such example is the subject of this post.

Though this website makes use of the facilities of Cloudflare for various functions that include the blocking of image hotlinking, the same outcome can be achieved using .htaccess files on Apache web servers. Nginx does not read .htaccess files so its own configuration files need updating to get a similar effect there, and some frown upon the .htaccess approach in any event. In any case, the lines that need adding to .htaccess are listed below though the web address needs to include your own domain in place of the dummy example provided:

RewriteEngine on

RewriteCond %{HTTP_REFERER} !^$

RewriteCond %{HTTP_REFERER} !^https?://(www\.)?yourdomain\.com(/)?.*$ [NC]

RewriteRule .*\.(gif|jpe?g|png|bmp)$ - [F,NC]

The first line turns on the mod_rewrite engine and you may have that done anyway. Of course, the module needs enabling in your Apache configuration for this to work and you have to be allowed to perform the required action as well. This means changing the Apache configuration files. The next pair of lines look at the HTTP referer strings and the third one only allows images to be served from your own web domain and not others. To add more, you need to copy the third line and change the web address accordingly. Any new lines need to precede the last line that defines the file extensions that are to be blocked to other web addresses.

RewriteEngine on

RewriteCond %{HTTP_REFERER} !^$

RewriteCond %{HTTP_REFERER} !^https?://(www\.)?yourdomain\.com(/)?.*$ [NC]

RewriteRule \.(gif|jpe?g|png|bmp)$ /images/image.gif [L,NC]

The variant above changes the last line so that a default image is displayed in place of the requested one. That may not reduce bandwidth usage as much as complete blocking but it does tell others what is happening.
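
The logic of the two RewriteCond lines can be tried out in a shell before going near a server. The following is a rough sketch and not a replacement for testing on Apache itself: the function name is_hotlink is made up, bash regular expressions stand in for those of mod_rewrite, and the pattern also accepts https and escapes the dot in the domain, which is my own adjustment.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the two RewriteCond lines: a request counts as
# hotlinking when the referer is non-empty and does not start with our domain.
is_hotlink() {
    local referer own
    # Lower-case the referer, standing in for the [NC] flag
    referer="$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')"
    own='^https?://(www\.)?yourdomain\.com(/)?.*$'
    [[ -n "$referer" && ! "$referer" =~ $own ]]
}

for referer in "" "http://www.yourdomain.com/gallery/" "http://another.example/page"; do
    if is_hotlink "$referer"; then
        echo "blocked: '$referer'"
    else
        echo "allowed: '$referer'"
    fi
done
```

An empty referer is let through because direct visits and some privacy tools send none, which is the purpose of the first condition.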

Some online writing tools

15th October 2021

Every week, I get an email newsletter from Woody’s Office Watch. This was something to which I started subscribing in the 1990s but I took a break from it for a good while for reasons that I cannot recall and returned to it only in recent years. This week’s issue featured a list of online paraphrasing tools that are part of what is offered by Quillbot, Paraphraser, Dupli Checker and Pre Post Seo. Each got its own review in the newsletter so I will just outline other features in this posting.

In Quillbot’s case, the toolkit includes a grammar checker, summary generator, and citation generator. In addition to the online offering, there are extensions for Microsoft Word, Google Chrome, and Google Docs. In addition to the free version, a paid subscription option is available.

In spite of the name, Paraphraser is about more than what the title purports to do. There is article rewriting, plagiarism checking, grammar checking and text summarisation. Because there is no premium version, the offering is funded by advertising and it will not work with an ad blocker enabled. The mention of plagiarism suggests a perhaps murkier side to writing that cuts both ways: one is to avoid copying other work while another is the avoidance of groundless accusations of copying.

It would appear that the main role of Dupli Checker is to avoid accusations of plagiarism by checking what you write yet there is a grammar checker as well as a paraphrasing tool on there too. When I tried it, the English that it produced looked a little convoluted and there is a lack of fluency in what is written on its website as well. Together with a free offering that is supported by ads that were not blocked by my ad blocker, there are premium subscriptions too.

In web publishing, they say that content is king so the appearance of an option using the acronym for Search Engine Optimisation in its name may not be as strange as it might seem at first glance. There are numerous tools here with both free and paid tiers of service. While paraphrasing and plagiarism checking get top billing in the main menu on the home page, further inspection reveals that there is a lot more to check on this site.

In writing, inspiration is a fleeting and ephemeral quantity so anything that helps with this has to be of interest. While any rewriting of initial content may appear less smooth than the starting point, any help with the creation process cannot go amiss. For that reason alone, I might be tempted to try these tools from time to time and they might assist with proof reading as well because that can be a hit and miss affair for some.

Installing VMware Player 4.04 on Linux Mint 13

15th July 2012

Curiosity about the Release Preview of Windows 8 saw me running into bother when trying to see what it’s like in a VirtualBox VM. While doing some investigations on the web, I saw VMware Player being suggested as an alternative. Before discovering VirtualBox, I did have a licence for VMware Workstation and was interested in seeing what Player would have to offer. Then, it was limited to running virtual machines that were created using Workstation. Now, it can create and manage them itself and without any need to pay for the tool either. Registration on VMware’s website is a must for downloading it but that has no monetary cost.

Once I had downloaded Player from the website, I needed to install it on my machine. There are Linux and Windows versions and it was the former that I needed and there are 32-bit and 64-bit variants so you need to know what your system is running. With the file downloaded, you need to set it as executable and the following command should do the trick once you are in the right directory:

chmod +x VMware-Player-4.0.4-744019.i386.bundle
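
Should there be any doubt about which variant a system needs, uname -m reports the machine type. The sketch below maps that to a file name suffix; the bundle_arch function is a made-up helper and the x86_64 suffix for the 64-bit bundle is my assumption, based only on the i386 one seen above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a machine type from uname -m to a bundle suffix.
# The x86_64 suffix is an assumption; only the i386 file name appears above.
bundle_arch() {
    case "$1" in
        x86_64) echo "x86_64" ;;
        i?86)   echo "i386" ;;
        *)      echo "unknown" ;;
    esac
}

echo "Machine type: $(uname -m), bundle variant: $(bundle_arch "$(uname -m)")"
```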

Then, it needs execution as a superuser. With sudo access for my user account, it was a matter of issuing the following command and working through the installation screens to instate the Player software on the system:

sudo ./VMware-Player-4.0.4-744019.i386.bundle

Those screens proved easy for me to follow so life would have been good if that were all that was needed to get Player working on my PC. Having Linux Mint 13 means that the kernel is of the 3.2 stock and that means using a patch to finish off the Player installation because the required VMware kernel modules seem to silently fail to compile during the installation process. This only manifests itself when you attempt to start Player afterwards to find a module installation screen appear. That wouldn’t be an issue of itself were it not for the compilation failure of the vmnet module and subsequent inability to start VMware services on the machine. There is a prompt to peer into the log file for the operation and that is a little uninformative for the non-specialist.

Rummaging around the web brought me to the requisite patch and it will work for Player 4.0.3 and Workstation 8.0.2 by default. Doing some tweaking allowed me to make it work for Player 4.0.4 too. My first step was to extract the contents of the tarball to /tmp where I could edit patch-modules_3.2.0.sh. Line 8 was changed to the following:

plreqver=4.0.4
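
The same amendment can be made without opening an editor. This is a sketch only, assuming that line 8 of the script is the one holding the version string as described above; the function name set_player_version is hypothetical and it is wise to inspect the file afterwards.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace line 8 of the patch script with a new version line.
# Usage: set_player_version SCRIPT VERSION
set_player_version() {
    local script="$1" version="$2" tmp status=0
    tmp="$(mktemp)" || return 1
    # Rewrite only line 8; every other line passes through untouched.
    # Writing back with cat keeps the permissions of the original file.
    sed "8s/.*/plreqver=${version}/" "$script" > "$tmp" && cat "$tmp" > "$script" || status=$?
    rm -f "$tmp"
    return "$status"
}

# Intended use from the directory where the tarball was extracted:
# set_player_version patch-modules_3.2.0.sh 4.0.4
```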

With the amendment saved, it was time to execute the shell script as a superuser having made it executable beforehand. This can be accomplished using the following command:

chmod +x patch-modules_3.2.0.sh && sudo ./patch-modules_3.2.0.sh

With that completed successfully, VMware Player ran as it should. An installation of Windows 8 into a new VM ran very smoothly and I was impressed with performance and responsiveness of the operating system within a Player VM. There are a few caveats though. First, it doesn’t run at all well with VMware Tools so it’s best to leave them uninstalled and it doesn’t seem to need them either; it was possible to set the resolution to the same as my screen and use the CTRL+ALT+ENTER shortcut to drop in and out of full screen mode anyway. Second, the unattended Windows installation wasn’t the way forward for setting up the VM but it was no big deal to have that experiment thwarted. The feature remains an interesting one though.

With Windows 8 running so well in Player, I was reminded of the sluggish nature of my Windows 7 VM and an issue with a Fedora 17 one too. The result was that I migrated the Windows 7 VM from VirtualBox to VMware and all is so much more responsive. Getting it there took not a little tinkering so that’s a story for another entry. On the basis of my experiences so far, I reckon that VMware Player will remain useful to me for a little while yet. Resolving the installation difficulty was worth that extra effort.

More Linux Distributions

21st September 2012

If a certain Richard Stallman had his way, Linux would be called GNU/Linux because he wants GNU to have some of the credit, but we’re lazy creatures and we all call it Linux instead. What still amazes me is the number of Linux distributions that are out there. This list captures those that do not fit into other lists that you can find in the sidebar, so do look at the others as well.

Many fit into the desktop and server computing paradigms while a minority are very distinctive. It is easier to write about the latter than the former, though personal experiences do add to any narrative. It is tempting to think that everything has become static after more than thirty years, yet that may be foolish given the ongoing flux in the world of technology. Only change is ever a constant presence.

More in the Way of Privacy

The controversy about security agencies eavesdropping on internet communications has upset some and here are some distros offering anonymity and privacy. Of course, none of these should be used for unlawful purposes since there are those in less liberal countries who need invisibility to speak their minds.

It is harder and harder to create a Linux distro that is very different from the rest, but this one uses application virtualisation for added security. You can organise your software into different domains so that you work more securely when moving data between applications from different domains.

There is more than a hint of privacy-mindedness in this distro when you look long enough at what it offers. Cinnamon, MATE and Xfce desktop environments are part of the offer and there is added software for extra privacy and security.

This is an option for those who are worried about being tracked online. All internet connections are sent via the Tor network and it is run exclusively as a live distro from CD, DVD or USB stick drive too, so no trace is left on any PC. The basis is Debian and the distro’s name is an acronym: The Amnesiac Incognito Live System. For us living in a democratic country, the effort may seem excessive but that changes in other places where folk are not so fortunate. The use of Tor may not be perfect but it should help in combination with the use of different sessions for different tasks and encrypting any files. There even is an option to make the desktop appear like that of Windows XP for extra discreteness of use.

Most Linux distros that have enhanced security and anonymity as a feature are not installable on a PC, but that exactly is what’s unique about Whonix. It’s based on Debian but all internet connections go via the Tor network. The latter is called Whonix-Gateway with Whonix-Workstation being what you use to work on your system. It may sound like being overly careful but it has me intrigued.

Entertainment

In many ways, these are appliance distros for anyone who just wants an install-it-and-go approach to things. That works better with dedicated devices than with multipurpose machines, so that is one thing that needs to be kept in mind.

The idea behind this offering is what it offers console gamers. Legacy games and peripherals will work and there even is support for Raspberry Pi as well.

The main purpose of this distro is to offer a home for the KODI entertainment centre on PC and Raspberry Pi devices. It follows from the now defunct OpenELEC project, which ran into trouble when developers’ voices were not given a hearing.

The acronym stands for Open-Source Media Centre and there is KODI here too. Though the distro also is based on Debian, one is tempted to wonder why anyone would not just install that and install KODI on top of it. The answer possibly has something to do with added user-friendliness for those who do not need to deal with such things.

Mandriva Offshoots

Mandrake once was a spin of Red Hat with a more user-friendly focus. In the days before the appearance of Ubuntu, it would have been a choice for those not wanting to overcome obstacles such as a level of hardware support that was much less than what we have today. Later, Mandrake became Mandriva following litigation and the acquisition of Conectiva in 2005. The organisation has declined since those heady days and it became defunct during 2015. Its legacy continues though in the form of two spin-off projects, so all the work of forebears has not been lost.

It was the uncertainty surrounding the future of Mandriva that originally caused this project to be started. Beginnings have been promising, so this is one to watch, though you have to wonder if the now community-based OpenMandriva is stealing some of its limelight.

Of the pair that is listed here, it is OpenMandriva which is a continuation of the now-defunct Mandriva. Seeing how things progress for a project with user-friendliness at its heart will be of interest in these days when Debian, Ubuntu and Linux Mint are so pervasive. Even with those, there are KDE options, so there is a challenge in place.

Anything Russian may not be everyone’s choice given the state of world affairs at the time of writing, yet this still is an offshoot of Mandriva so it gets a mention in this list. Desktop environment options include KDE, XFCE and LXQt and there are various use cases covered by a range of solutions.

Others

Not every distro falls into the above categories, and some that you find here may surprise you. There are some better-known names like openSUSE that go their own way.

Aside from the founder’s dislike of ISO disk images for whatever reason, this distro has its own eccentricities. For example, it is container-friendly, runs in memory as root and much more. This is branded as an experimental distro, and it is that in many ways.

This project creates respins of openSUSE for the sake of a more refined experience. For instance, there are live booting ISO images as well as inclusion of media codecs. There is plenty of choice too when it comes to desktop environments.

From what I have seen, this project seems to be supporting the same needs as Arch, albeit with all software needing to be compiled, so there’s more of a DIY approach. The wiki also comes in handy for those users.

Billing itself as a lean independent distribution focussing on QT and KDE, this is built from the ground up without any dependence on other distros. Some tools, like pacman, naturally come from elsewhere in this otherwise standalone offering.

Here is another distro apart from Ubuntu that has an African name, the Zulu for big chief this time around. It came to my notice among the pages of the now defunct Micro Mart magazine and uses MATE, XFCE, Enlightenment and KDE as its desktop environment choices.

SuSE Linux was one of the first Linux distros that I started to explore and I even had it loaded on my home PC as a secondary operating system for quite a while too before my attention went elsewhere. Only for a PC Plus cover-mounted CD, I never might have discovered it and it bested Red Hat, which was as prominent then as Fedora is today. When SuSE fell into Novell’s hands, it became both openSUSE and SuSE Linux Enterprise Edition. The former is the community offering and the latter is what Novell, now itself an Attachmate Group company, offers to business customers. As it happens, I continue to keep an eye on openSUSE and even had it on a secondary PC before font resolution deficiencies had me looking elsewhere. While it’s best known for its KDE variant, there is a GNOME one too and it is this that I have been examining.

There was a time when this was being touted as an Ubuntu killer but it never seems to have made good on that promise. Recent troubles within the project haven’t helped either, especially with a long wait between releases.

This Turkish distro recently got reviewed in Linux Format and they were not satisfied with its documentation. It does not help that the website is not in English, so you need a translation tool of your choosing for this one.

Though there also is a spin using the MATE desktop environment, this distro is perhaps better known as the home for the Budgie desktop environment. All of this is for home computing and not its business or enterprise counterpart. There is nothing to say against that and it may make things feel a little more friendly.

The name sounded familiar for some reason and I reckon that’s because Samsung has smartphones running Tizen on sale. The whole point of the project is to power mobile computing platforms with only the mention of netbooks sullying an otherwise non-PC target market that includes tablets and TVs. It’s overseen by the Linux Foundation too.

Command line mapping of network drives

5th September 2007

Mapping network drives in Windows usually involves shuffling through Explorer menus. There is another way that I consider to be neater: using the Windows command line ("DOS" to some). The basic command for creating a mapping goes like this:

net use w: \\yourserver.address\sharename

To ensure persistence of the mapping across different Windows sessions, use this:

net use w: \\yourserver.address\sharename /persistent:yes

Here’s how to set up a mapping that logs in as a different user:

net use w: \\yourserver.address\sharename password /user:you

The above can include domain information as well and in a number of different forms: domain\username is one.

To delete a mapping, try this:

net use w: /delete

List all existing mappings:

net use

This is a flavour of what is available and Microsoft does provide documentation. Issuing the following command will bring up some of that on the command line:

net help use